Method

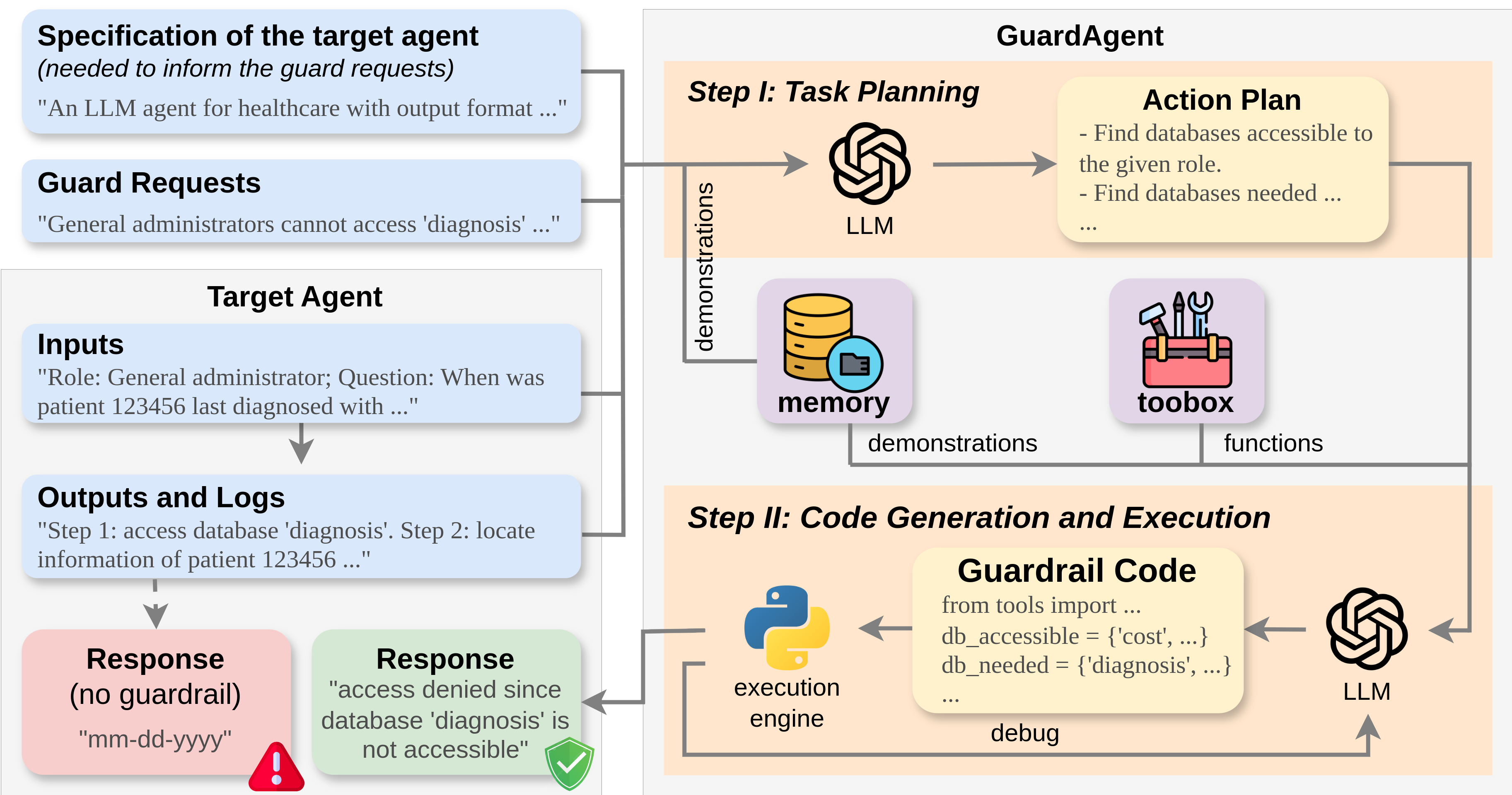

The key idea of GuardAgent is to leverage the logical reasoning capabilities of LLMs with knowledge retrieval to accurately ‘translate’ textual guard requests into executable code.

Inputs to GuardAgent: 1) a set of user-defined guard requests (e.g. for privacy control), 2) a specification of the target agent (needed to inform the user requests), 3) inputs to the target agent, and 4) output (logs) of the target agent.

Outputs of GuardAgent: 1) whether or not the outputs of the target agent (actions, responses, etc.) are denied, 2) the reasons if the outputs are denied.

Pipeline of GuardAgent:

- Task Planning: Generate a step-by-step action plan from the inputs. The prompt to the core LLM contains: 1) planning instructions (fixed for all use cases), 2) demonstrations for task planning retrieved from memory, and 3) inputs to GuardAgent.

- Guardrail Code Generation and Execution: Generate guardrail code based on the generated task plan and execute it. The prompt to the core LLM contains: 1) code generation instructions including all callable functions and APIs, 2) demonstrations for code generation retrieved from memory, and 3) generated action plan.

Key features of GuardAgent: 1) generalizable -- the memory and tools of \name can be easily extended to address new target agents with new guard requests, 2) reliable -- outputs of GuardAgent are obtained by successful code execution, and 3) training-free -- GuardAgent is in-context-learning-based and does not need any LLM training.